It has been impossible to miss: AI, once an obscure and somewhat futuristic idea, has become a household name. Mainstream news outlets like the BBC are publishing multiple articles on AI per week, and some are even incorporating it into their news production. The medical field has seen a similar growth: whereas 2020-2021 saw a mere 9,407 publications (as per PubMed), the 2024-2025 period saw an incredible 38,004 publications, and the year isn’t even over! At the same time, for many, AI has come to mean LLMs or, even more specifically, brand names such as ChatGPT, Grok, or Claude – something immediately visible in the number of articles where the “impact of AI on a certain field” is equated with the impact of ChatGPT on that field.

Given the rise in the popularity of AI, it is unsurprising that we’ve seen various parties scramble to come up with all sorts of implementation guidelines and legislation.

A Catholic perspective

The Catholic Church, in turn, has published Antiqua et Nova. After giving what I believe to be the best technical description of AI for a lay audience, the document goes on to offer guidelines for the development and implementation of AI: we must ensure it “always supports and promotes the supreme value of the dignity of every human being and the fullness of the human vocation”. Central is the idea that AI might produce human-like results, but is unable to engage in relationships or carry moral responsibility. In other words: AI will never be human and we shouldn’t try to replace ourselves (and even less, God) with it.

The text does, however, recognise that AI, for all its faults, may also have benefits to offer the world. So, in weighing those dangers and benefits, I believe we need to make our voice heard as Catholics, as we must be “ambassadors of Christ”. Ideally then, we should know about innovations before they arrive, so that we have something to say when they do.

Our role

While looking into a crystal ball to predict the future is explicitly outlawed by the Church, we do have other ways of predicting the future of AI that don’t require confession, such as the so-called “Gartner hype cycle”: a report published yearly by Gartner, Inc., an American research and advisory firm, to provide their clients with an insight into the maturity and adoption of novel technologies. While many technologies don’t fit neatly in Gartner’s famous trajectory (e.g., because they disappear entirely, lack fitting implementation, or aren’t even spotted by the analysts), it remains a popular way of appraising trends. So let’s do that!

Our goal here is to be ahead of the curve, so I’d like to highlight two technologies that Gartner says are in the “innovation trigger” stage, i.e., the very beginning of relevance: Embodied AI and Neurosymbolic AI.

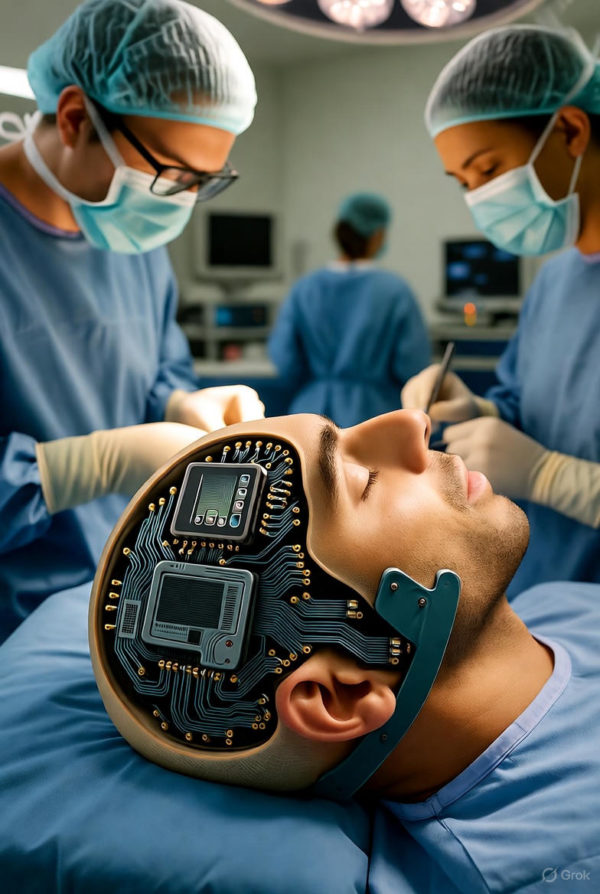

Embodied AI

The Catholic Church teaches that the body and soul are one, and unsurprisingly then, Catholics don’t necessarily agree with the Cartesian dualism that our modern world is imbued with. The idea that our physicality might be an innate part of our intelligence is what underlies Embodied AI (i.e., E-AI). It proposes a system that consists of hardware to collect data and act on the world, and software that takes that data, learns from it, decides a course of action, and uses that action to (autonomously) collect more data. If its proponents are to be believed, this might prove the next step towards Artificial General Intelligence (i.e., AGI). Permitting myself a little speculation, I see semi-autonomous smart dust (a swarm of minuscule robots all interacting with each other and their environment) providing biofeedback on gut health, or an autonomous Internet of Things (IoT) making the hospital building “come alive” to regulate its light, temperature, etc. all by itself.
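For the technically curious, the sense, learn, decide, act cycle described above can be sketched in a few lines of Python. This is only a toy illustration and not how any real E-AI system is built: the `Room`, the `Agent`, the "0.5 degrees per unit of heating" physics, and every number in it are assumptions I invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Room:
    """Toy stand-in for the physical world: a single room (hypothetical)."""
    temperature: float = 17.0

    def sense(self) -> float:
        # "Hardware to collect data": read the current temperature.
        return self.temperature

    def act(self, heating: float) -> None:
        # "Hardware to act on the world". Assumed physics for this sketch:
        # each unit of heating warms the room by 0.5 degrees.
        self.temperature += 0.5 * heating


@dataclass
class Agent:
    """Software that learns from readings and decides what to do next."""
    target: float = 21.0
    gain: float = 1.0  # initial (wrong) guess of degrees gained per unit of heating

    def decide(self, reading: float) -> float:
        # Pick the heating the agent believes will close the gap, capped at 4 units.
        error = self.target - reading
        return max(0.0, min(error / self.gain, 4.0))

    def learn(self, before: float, heating: float, after: float) -> None:
        # Move the estimate halfway towards what the last action actually did.
        if heating > 0:
            observed = (after - before) / heating
            self.gain += 0.5 * (observed - self.gain)


def run_loop(steps: int = 10) -> float:
    """Run the sense -> decide -> act -> learn cycle and return the final temperature."""
    room, agent = Room(), Agent()
    for _ in range(steps):
        before = room.sense()
        heating = agent.decide(before)
        room.act(heating)
        after = room.sense()  # the action itself produces the next data point
        agent.learn(before, heating, after)
    return room.sense()
```

The point of the sketch is the loop itself: the agent starts with a wrong belief about its own effect on the room, and only by acting on the world does it gather the data needed to correct that belief.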

Neurosymbolic AI

Crucial to the implementation of AI in medicine are trust and explainability. One way to achieve a higher degree of explainability is neurosymbolic AI, in which “old” symbolic (i.e., logic-based) AI and “new” AI (e.g., deep learning (DL)) are combined to create a hybrid system. Such a system may take many forms (using a DL system as an input to a symbolic system, using a symbolic system instead of normal nodes within a DL system, etc.), but the result is often more generalisable, scalable and transparent than the forms of AI we have become accustomed to. An example close to my heart is a project I’m currently working on: using DL to describe a skin lesion, and using a symbolic reasoning system to come up with a fully explainable diagnosis.
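To make the “DL as input to a symbolic system” form a little more concrete, here is a minimal Python sketch. It is not the code of my project: `describe_lesion` is a hard-coded stand-in for a deep learning model, and the feature names, scores, threshold, and rules are all invented for illustration and carry no clinical meaning.

```python
from typing import Dict, FrozenSet, List, Tuple

THRESHOLD = 0.5  # assumed cut-off for turning a score into a yes/no fact


def describe_lesion(image_id: str) -> Dict[str, float]:
    """Stand-in for the DL step: returns made-up confidence scores per feature."""
    canned = {
        "lesion_a": {"asymmetric": 0.91, "irregular_border": 0.78, "multiple_colours": 0.12},
        "lesion_b": {"asymmetric": 0.08, "irregular_border": 0.15, "multiple_colours": 0.05},
    }
    return canned[image_id]


# Hypothetical rules, checked in order: (rule id, required facts, conclusion).
# The last rule requires nothing, so it acts as the default.
RULES: List[Tuple[str, FrozenSet[str], str]] = [
    ("R1", frozenset({"asymmetric", "irregular_border"}), "refer for specialist review"),
    ("R2", frozenset({"multiple_colours"}), "refer for specialist review"),
    ("R3", frozenset(), "routine follow-up"),
]


def reason(scores: Dict[str, float]) -> Tuple[str, List[str]]:
    """Symbolic step: apply the first matching rule and record why it fired."""
    facts = {name for name, score in scores.items() if score >= THRESHOLD}
    for rule_id, required, conclusion in RULES:
        if required <= facts:  # every required fact was observed
            trace = [
                f"observed: {sorted(facts)}",
                f"rule {rule_id}: requires {sorted(required)} -> {conclusion}",
            ]
            return conclusion, trace
    raise RuntimeError("no rule matched")  # unreachable while a default rule exists


conclusion, trace = reason(describe_lesion("lesion_a"))
print(conclusion)
for line in trace:
    print(" ", line)
```

The neural part may remain a black box, but the step from description to conclusion can be read back line by line, which is where the explainability comes from.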

Conclusion

There is a plethora of other, equally promising technologies – wetware (building computers from organic material), causal AI (using AI to find causal relations), first principles AI (AI that reasons using approved steps, starting from a base of approved knowledge) – all forms of AI with potential medical applications. Now, each of these is an attempt to “humanise” AI, whether by placing the AI in a physical environment or by taking inspiration from human “two-system thinking”. Of course, our foremost duty as medical professionals is to ensure that AI, if implemented, improves the clinical outcomes of our patients, but our duty as Catholics goes further: I believe we ought to be guardians of humanity in medicine, to ensure we remain visible and responsible, lest our profession become that famous catchphrase: “I’m sorry … computer says no”.

LinkedIn: https://www.linkedin.com/in/mel-visscher/

Fittingly, the image was generated using xAI’s Grok.

Mel Visscher